ChatGPT could be a partner in education if we overcome these challenges

Academics Wong Lung Hsiang and Looi Chee Kit note that information and AI literacies are or will become essential for anyone living in the IT era. One must possess both literacies to responsibly and constructively produce and disseminate information, as well as to understand and appraise the functions and limitations of AI tools, and the challenges they pose.

The advent of the artificial intelligence (AI) chatbot, ChatGPT, sparked a flurry of debates among educators and was even discussed in a recent parliamentary session in Singapore. ChatGPT (a tool that produces text based on requirements that users input in text format) is but the tip of the iceberg of AI content generation tools that could impact education.

Generative AI tools include those that produce images, videos, sound clips and even computer programmes based on text-format user requirements. It is even possible to produce texts based on user-input images. Does it worry you to know that AI can seemingly help a student complete any school assignment?

AI as partners

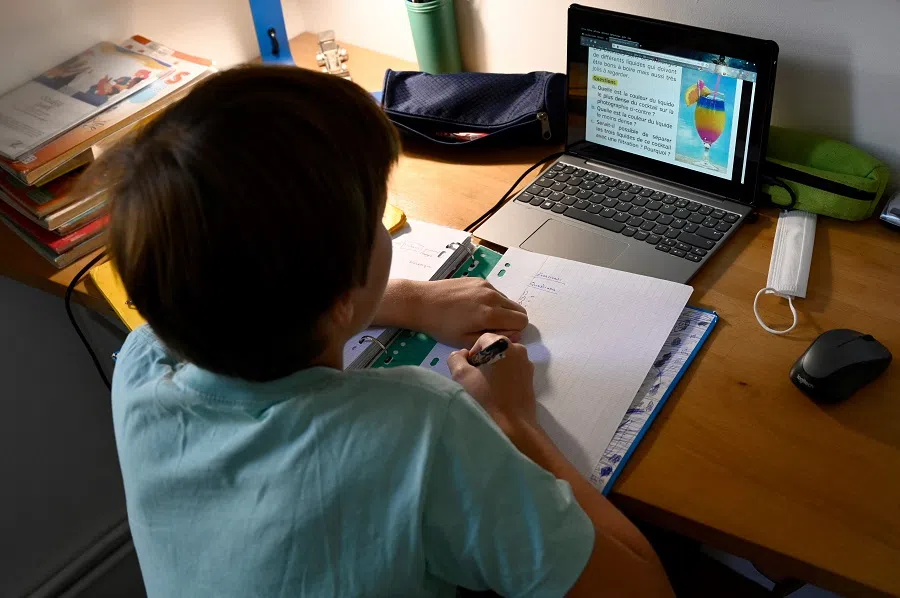

Revolutionary technologies inevitably draw the public eye when they first appear. Less than two decades ago, people were worried that the Internet would erode the status of teachers and textbooks as keepers of knowledge, especially as search engine usage became increasingly commonplace. Today, students participate in online learning and learn in school how to integrate online information. In fact, the Internet has become a powerful tool to circumvent the restrictions of classroom time and help students become independent learners and critical thinkers.

ChatGPT has drawn the scepticism of educators as it seemingly challenges the way of teaching, learning and assessment that many of us take for granted and which can be traced back several centuries.

But we have long entered the information age and the philosophy of education has to be remodelled to focus on cultivating students, teachers and employees to treat technology as partners. Therefore, unlike other commentaries that focus on the specifics, like how to prevent students from using ChatGPT to cheat or providing teaching tips, we wish to discuss information and AI literacies, and how humans and machines can become smart partners.

Information and AI literacies are or will become essential for anyone living in the IT era. Information literacy is the ability to obtain, critically evaluate and integrate information. It is also about responsibly and constructively producing and disseminating information (whether in online, digital or traditional print media form).

Meanwhile, AI literacy is about understanding and appraising the functions and limitations of AI tools, and the challenges they pose. It is also about knowing how to responsibly use AI to augment decision-making, assist us with a myriad of tasks, improve our quality of living and more.

More importantly, beyond the skills aspect, both literacies also encompass a holistic view of human and AI collaboration, as well as the relevant moral and societal values, for example the responsible usage mentioned earlier.

In Singapore, the omnipresence of the Internet means that information literacy is already a mainstay. The government's digital media and information literacy framework has been incorporated into the secondary school curriculum, while the National Library Board organises regular events to raise public awareness.

The pervasiveness of fake news and online scams means that fact-checking is a core competency in information literacy. However, what many of us may not realise is that in our journey to becoming a smart nation, there will be an increasing number of AI tools deployed around us. By the time the next generation enters the workforce, AI applications will be even more commonplace. Therefore, the government's AI Singapore programme that drives AI development is also promoting AI literacy.

Inculcating information and AI literacies

Some academics have proposed using ChatGPT (or other AI content generation tools) to inculcate information and AI literacies in students. While such tools are not the only means to do so, they stand out for being easy to pick up, interesting to use (thus sparking learning interest), and applicable to almost every subject. More importantly, despite the seemingly unbounded intelligence and talents of these tools, they have innate limitations and deficiencies, which in turn can provide opportunities for creative pedagogies.

For instance, some people have suggested that teachers instruct students to use ChatGPT to produce a passage similar in content to that used in a lesson. Following this, the students have to go online to search for the sources of the arguments made before identifying the biases and correcting the factual errors in the text.

The students can also be asked to critique the content and writing style. Individually, students with views that differ from those in the text can debate them with the chatbot. Such activities kill two birds with one stone in that lesson content is enriched and at the same time, students deepen their information literacy, which allows them to become more discerning of fake information online.

To teachers and more advanced students, the works produced by the current crop of AI content generation tools are no better than drafts and still leave much room for improvement. For example, besides rectifying the mistakes therein, there may also be a need to reorganise the content, add or delete arguments, and introduce real-life examples. The advantage of using such tools is that there is no need for teachers and students to start writing from scratch, since the AI has carried out a preliminary selection of the content. This leaves the writer with more time to organise their thoughts before amending the draft to elucidate these thoughts.

Alternatively, the writer can also refine their viewpoints while amending the piece. Splitting the task in such a manner allows humans and machines to form a smart partnership, and in doing so, AI is used to augment instead of replace human intelligence. The caveat is that students need to be proficient in writing an essay from scratch before using ChatGPT in this manner, otherwise they will find it challenging to improve upon the drafts produced by the chatbot.

Asking the right questions

In our article on the applications of ChatGPT in education published by Lianhe Zaobao in January, we proposed that it is more important for the 21st century learner to ask the right questions rather than be able to answer questions. This is also pertinent to information and AI literacies.

In our context, asking the right questions refers to entering well-crafted key phrases into search engines, querying ChatGPT in the right manner, or issuing the appropriate commands to AI tools. To obtain high-quality and relevant output, the right or high-quality questions in the given context have to be asked. Similarly, for an AI tool to perform optimally and up to expectations, the most favourable conditions must be present.

Here is a battlefield example to illustrate the importance of asking the right questions. A military commander who does not know how to ask the right questions will not know where to send his scouts and what he wants them to look out for. Hence, he will not be able to accurately assess the situation.

In addition, if the commander does not know how to deploy his troops or how to be flexible, his forces may be wiped out despite being sizeable and well-trained. In this context, the concept of a smart partnership refers to having the machine play the roles of advisor, scout and vanguard, while the human is the commander who deciphers the intelligence and is an independent decision-maker.

An oft-discussed classroom activity involves having students come up with their own questions on a particular topic to ask ChatGPT. The students will then present their findings and compare the answers they obtained in order to deepen their knowledge of the topic.

To raise information and AI literacies, we propose the following extension to the exercise: the students will subsequently have to select the most relevant or comprehensive answer before going on to deduce or reflect on the line of questioning it takes to obtain such answers.

Considering moral issues

Information literacy is also about the responsible production and dissemination of information that is authentic and free from bias. Prior to dissemination, it is necessary to weigh the potential impact on society or on the parties involved. For instance, AI content generation tools can be used to produce deepfake videos. Teachers and students can discuss the consequences of posting online such potentially problematic content generated by AI tools. Likewise, the moral issues arising from the development (if the students possess such expertise) and usage of AI tools are part of AI literacy. Reflecting on such issues provides comprehensive training to gain AI literacy.

We certainly understand the concerns that some people have about ChatGPT. For decades, academics in the field of education technology have been advocating for the low-level, repetitive and memory-based grunt work in education to be handled by computers so that teachers and students have more time to think and complete higher-level educational tasks. But AI content generation tools such as ChatGPT are capable of more than just grunt work, and this renders obsolete certain skills that students previously had to learn.

At the same time, it also means that students now require more advanced skills (in order to get the most out of such tools) and one cannot help but wonder whether this would result in a new digital divide in information or AI literacy.

For example, an article produced by ChatGPT may be inferior to what a more advanced student is capable of writing. This would prompt the student to edit the piece, leading to more in-depth learning and thinking. However, less advanced students may feel that since they are unable to write better than ChatGPT, they might as well take the easy way and get it to do their homework.

Hence, when it comes to the application of ChatGPT in education, it is also necessary to separately explore the topic of differentiated instruction, that is, tailor-made AI-assisted learning approaches for students according to their abilities.

This article was first published in Lianhe Zaobao as "君子生非异,善假于物也 ──信息素养和AI素养的培养".
