Too early to celebrate successes of AI video in China

Although AI-powered video generation tools are becoming one of the hottest investments for China’s big tech companies, they are still some ways off from being able to produce a coherent feature-length film with the click of a button.

(By Caixin journalists Guan Cong and Ding Yi)

Video generation tools powered by artificial intelligence (AI) have become one of the hottest investments for China’s big tech companies as they look to broaden their revenue streams.

Since ChatGPT developer OpenAI surprised the world with its text-to-video model Sora in February 2024, Chinese companies have rapidly rolled out similar tools that have been used to make short films and video series.

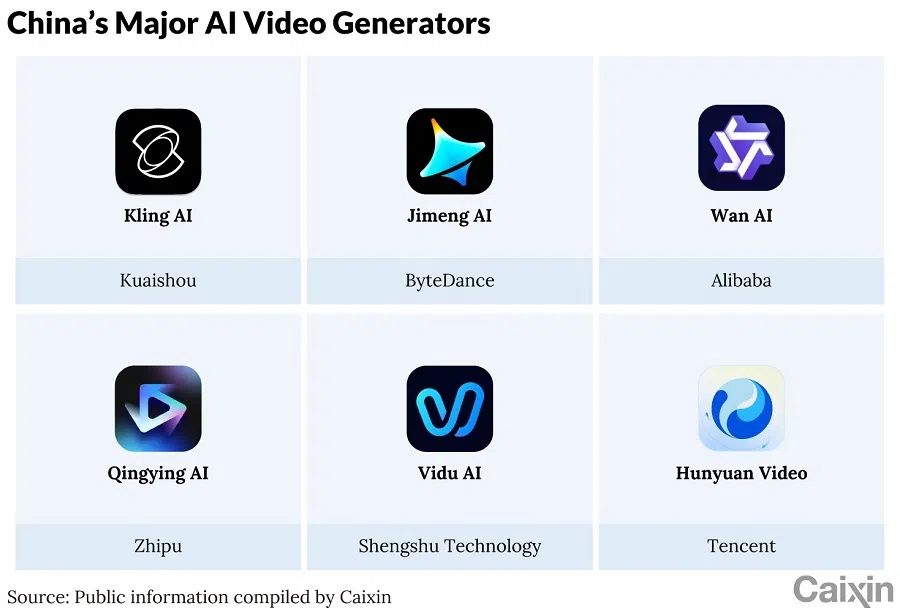

In June 2024, Beijing-based short-video specialist Kuaishou Technology launched its text-to-video model, Kling AI. The company said it has upgraded the tool more than 20 times since, touting improvements to motion accuracy, composition, colour tone and how well it understands user text prompts. One month later, TikTok-owner ByteDance Ltd. unveiled its AI-powered video generation app, Jimeng AI. Both tools can create short-format, high-definition video clips from written prompts or images.

While Kuaishou and ByteDance have each begun charging users for subscriptions to Kling AI and Jimeng AI, aiming to cash in on a heavily hyped technology, they have yet to prove the tools can revolutionise film-making or other industries due to their limited capabilities.

Growth in AI video

Unlike much of the tech world, Kuaishou has not focused on developing a chatbot. It has developed large language models (LLMs), the technology that underpins advanced chatbots like ChatGPT, but it uses them for its eponymous short video app and some of its other businesses such as advertising. The company believes that LLMs like generative pre-trained transformers have limited reasoning capabilities, so their path toward artificial general intelligence will be challenging, Gai Kun, Kuaishou’s senior vice-president, said in public remarks late last year.

In addition, Kuaishou’s LLMs have not met its expectations as a viable business due to their limited capabilities. These factors have led Kuaishou to see a growth opportunity in AI video generation, according to Gai.

Kuaishou has therefore invested heavily in developing foundational large models for video generation. Over the past two years, the company has built a computing cluster to help train its large models, which have trillions of parameters, it said in a press release issued in August alongside its half-year results. On 29 May, the company released version 2.1 of Kling AI, which can generate five-second videos at 1080p resolution.

Kling AI generated more than 150 million RMB (US$20.8 million) in revenue in the first quarter of 2025, Kuaishou said in an earnings report released Tuesday, adding that the new business initiative has been “demonstrating robust momentum as the second growth curve”.

In March, Jimeng AI had 8.93 million monthly active users and Kling AI had 1.8 million, according to data from market research firm QuestMobile.

ByteDance also sees video generation software as one of its most important businesses. In early 2024, Zhang Nan resigned as the CEO of the division running Douyin — TikTok’s sister app in China — to lead several AI projects at the company’s video-editing unit Jianying.

After taking on the new role, Zhang received greater access to computational resources to develop Jimeng AI, which Zhang said was inspired by OpenAI’s DALL-E 2, a model that can create images from a wide range of text descriptions.

In September 2024, ByteDance launched two video generation models — PixelDance and Seaweed — which were open to Jimeng AI users. The five video generation models that have been integrated into Jimeng AI were developed by ByteDance’s Seed department. The department has been given responsibility for allocating resources to develop large models that can be used both within the company and by external corporate users.

This March, ByteDance incorporated DeepSeek’s R1 reasoning model into Jimeng AI to help its users write more detailed and descriptive text prompts.

Wei Lian, the director of the fully AI-generated horror video series Muye Guishi, said that different models have different advantages. For example, Kling AI is better suited to fine-tuning AI character performances because of its exacting control over micro-expressions, while Jimeng AI is good at addressing issues with scene continuity and character movement, he said.

Cashing in on creators

In March, filmmaker PJ Ace posted an AI-produced video online that recreated the original trailer for The Lord of the Rings: The Fellowship of the Ring as if it had been animated by the famed Japanese animation house Studio Ghibli, known for its hand-drawn and whimsical style. The AI filmmaker said he created the video using several tools including Sora and Kling AI. The project cost him $250 in Kling credits and nine hours of his time re-editing the trailer.

Wei said that Muye Guishi contained many complex action sequences. Using Kling AI to turn images into a one-minute video clip cost roughly 270 RMB to 530 RMB in credits based on the model’s basic monthly subscription option.

Soon after the launches of Kling AI and Jimeng AI, Kuaishou and ByteDance launched tiered subscription systems, with higher, more expensive tiers giving users more credits to generate videos.

To further expand its user base, Kling AI launched a “future partners plan” in October, which aims to help AI video creators win contracts from brands, organisations and companies.

In March this year, Jimeng AI worked with Douyin to launch a campaign that gave away Jimeng credits to encourage creators to make AI-generated micro dramas. One group of creators on Douyin’s video sharing platform created a series featuring an AI-generated cat character doing things like shopping and cooking. The videos drew enough of a fanbase for some of their creators to start a livestreaming e-commerce business, from which Douyin gets a cut of every sale.

Generator shortcomings

Despite the impressive output of AI-powered video generators, they are still some ways off from being able to produce a coherent feature-length film with the click of a button.

Currently, producing an AI film or long-form video series usually requires first generating images with text-to-image tools and then generating videos from those images with image-to-video software. Along the way, creators have to use many other tools, such as the photo-editing software Photoshop.

Wei said that he used a variety of video generation tools to make Muye Guishi, including Jimeng AI, Kling AI, PixVerse and Pika AI, because each tool had its own unique traits. The use of different tools is a sign that the technology behind any AI-powered video generator is not strong enough to take a dominant position in filmmaking.

Many market insiders expect progress will come with the development of multimodal models that can process and integrate multiple types of data including text, images, audio and video to generate more complex and realistic videos, scriptwriter Zhu Shang said.

This article was first published by Caixin Global as “In Depth: AI Video Is Becoming a Sector to Watch in China, but Don’t Get Out the Popcorn Yet”. Caixin Global is one of the most respected sources for macroeconomic, financial and business news and information about China.