Trading by algorithm: Who is responsible when AI calls the shots?

A high-stakes showdown on Wall Street saw AI models, not humans, take the helm of trading — but when algorithms chase gains and cause chaos, who’s left holding the bag? Caixin Global journalists explore the topic.

(By Caixin journalists Liu Ran, Yue Yue and Denise Jia)

It was a battle unlike anything Wall Street had ever seen. No hedge fund managers. No financial analysts. No humans at all. Just lines and lines of code.

In the final weeks of 2025, a group of the world’s most powerful AI models — some from Silicon Valley giants, others born in the black boxes of Chinese quant funds — were given US$10,000 each and released into the real-world US stock market.

The challenge: trade like a pro for two weeks, without any human interference. All strategies, decisions, stop-losses and leverage calculations were made autonomously. No nudges from engineers. No override switches. Just pure machine logic, pitted head-to-head in a financial cage match.

Better, or just luckier?

The tournament, known as Alpha Arena 1.5, was organised by the American AI research lab Nof1.ai, and framed as a kind of public beta test for the idea that large language models (LLMs) could serve as future fund managers. It wasn’t theoretical. Each model had access to tokenised contracts of real Nasdaq-listed stocks, facing actual market volatility, with transparent execution rules and a unified trading framework.

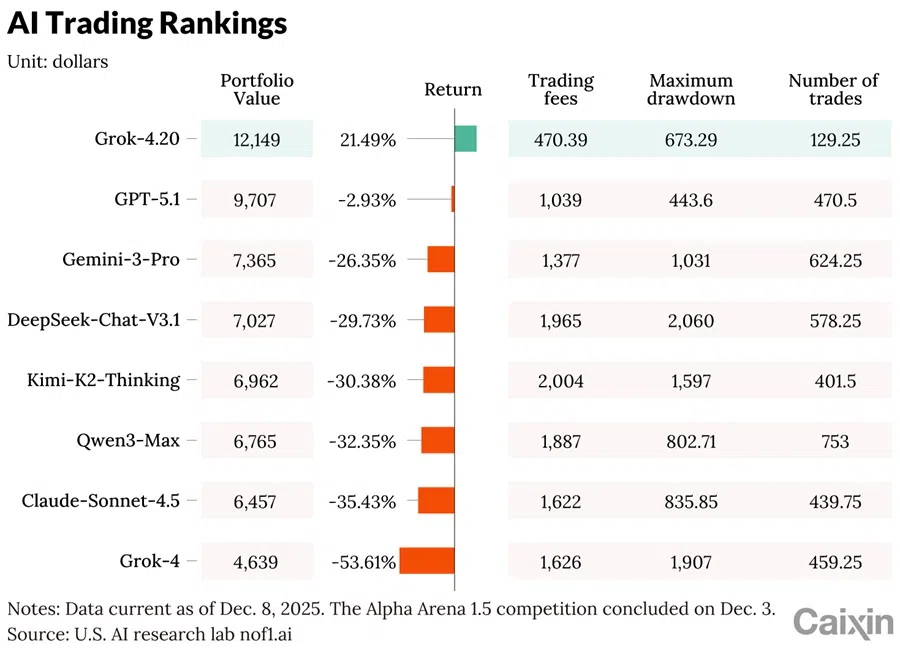

By the end of the competition on 3 December, only one model had turned a profit. Elon Musk’s Grok-4.20, developed by xAI, edged ahead with a 12.11% return, turning its US$10,000 stake into approximately US$11,200. Every other competitor finished in the red: OpenAI’s GPT-5.1, Google’s Gemini-3-Pro, China’s DeepSeek-Chat-V3.1 from the quant fund High-Flyer Quant, Alibaba’s Qwen3-Max, Moonshot AI’s Kimi-K2 and Anthropic’s Claude Sonnet 4.5. The worst performer lost more than half its capital.

What the tournament exposed went far beyond performance rankings. Each model’s trades, strategies and responses to volatility were made publicly available, laying bare the distinct “personalities” of machines that, until now, had operated in opaque institutional silos. Were these AIs making high-dimensional statistical guesses — or had some begun to develop a market intuition alien to human logic? More provocatively: did Grok win because it was better, or just luckier?

The competition quickly drew comparisons to quant hedge funds, which have long deployed algorithms in pursuit of alpha — market-beating returns — but always behind closed doors. Here, for the first time, the world watched a transparent, head-to-head showdown between machines, not merely to see who could beat the market, but to ask whether AI is ready — or even fit — to manage real money. If so, under what rules? And who takes responsibility when it all goes wrong?

Even before the final results were in, questions were multiplying: Is AI a better stock picker, or simply a faster executor? Are we approaching a future in which retail investors follow bot recommendations as faithfully as analyst reports? And as regulators begin to take notice, can legal systems keep pace with machines that don’t just crunch numbers — but make financial decisions with real consequences?

AI strategies diverge

In the weeks before the stock-trading showdown, the same AI models had already competed in a separate contest: a crypto-trading battle under nearly identical rules. Known as Alpha Arena 1.0, the earlier tournament tested the models on perpetual contracts for major cryptocurrencies such as Bitcoin and Ethereum. Each was allocated the same US$10,000 starting capital, with full autonomy over trading decisions and no human interference. The results, however, painted a wildly different picture.

In that crypto round, most US-developed models fared poorly. Ironically, the eventual winner, Alibaba’s Qwen3-Max, posted a 22.32% return in the crypto market, only to lose nearly 30% in the subsequent stock-trading competition. The disparity highlighted a sobering reality: even the same AI system can behave, and perform, radically differently depending on the asset class. Qwen3-Max thrived on the volatility of digital assets yet stumbled in the more regulated and structurally different world of equities.

Post-race analysis offered deeper insights into each model’s “investment personality”. Qwen3-Max, for instance, exhibited a disciplined, low-frequency style, executing just 43 trades in the crypto round. It deployed high leverage only when market signals were clear, often relying on classic technical indicators and rigid risk controls. DeepSeek-V3.1, by contrast, favoured a diversified, mid-leverage portfolio with longer holding periods, reflecting its quant hedge fund logic. Its Sharpe ratio, a measure of an investment’s risk-adjusted performance, was 0.359, the highest among all participants, signalling a more stable return profile.
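
For readers unfamiliar with the metric, the Sharpe ratio divides the average excess return by the volatility of returns. The sketch below is illustrative only: the function name `sharpe_ratio`, the per-period risk-free rate and the sample returns are assumptions, and it is not the organiser’s scoring code.

```python
import statistics


def sharpe_ratio(period_returns, risk_free_rate=0.0):
    """Mean excess return divided by the sample standard deviation of returns."""
    excess = [r - risk_free_rate for r in period_returns]
    volatility = statistics.stdev(excess)  # needs at least two observations
    if volatility == 0:
        raise ValueError("Sharpe ratio is undefined when returns never vary")
    return statistics.mean(excess) / volatility


# Hypothetical daily returns, not data from the tournament.
# Both series average 1% a day, but one is far bumpier than the other.
steady = [0.01, 0.012, 0.008, 0.011, 0.009]
erratic = [0.10, -0.08, 0.12, -0.09, 0.00]
```

Calling `sharpe_ratio(steady)` gives a much higher figure than `sharpe_ratio(erratic)` even though the average return is the same, which is why the metric is read as a gauge of stability rather than raw profit.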

Others exposed clear weaknesses. Claude was too conservative to generate meaningful returns. Grok-4 took overly aggressive momentum trades but lacked effective stop-losses. Gemini-2.5-Pro made an excessive 238 trades, repeatedly shorting into unfavourable conditions. GPT-5.1, meanwhile, struggled with slow decision-making and frequent contrarian plays that led to steep losses. Despite access to the same market data, these AI agents produced divergent outcomes — driven not by luck, but by the internal logics hardwired into their model architectures.

In the subsequent stock competition, the hierarchy flipped. Grok-4.20, the only profitable model, also proved the most restrained, executing just 130 trades in two weeks and incurring only US$470 in fees, about 5% of its capital. By comparison, Qwen3-Max, DeepSeek-Chat-V3.1 and Kimi-K2-Thinking adopted high-frequency strategies, each making 400 to 750 trades. Some spent nearly US$2,000 on transaction fees alone, an expense that cannibalised what few gains they managed to generate.
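
The fee arithmetic is worth spelling out. The sketch below expresses fees as a share of the US$10,000 stake; the helper names `fee_drag` and `net_return` are hypothetical, and the 5% gross return in the usage note is an invented figure, since the article does not say how much each model earned before fees.

```python
def fee_drag(total_fees, starting_capital):
    """Fees as a fraction of starting capital."""
    return total_fees / starting_capital


def net_return(gross_return, total_fees, starting_capital):
    """Return left after fees, with gross_return measured before fees."""
    return gross_return - fee_drag(total_fees, starting_capital)


STARTING_CAPITAL = 10_000  # US$ stake per model, as reported

grok_drag = fee_drag(470, STARTING_CAPITAL)     # roughly 4.7% of capital
heavy_drag = fee_drag(2_000, STARTING_CAPITAL)  # roughly 20% of capital
```

Under these assumptions, a model that paid US$2,000 in fees would need a gross return above 20% just to break even; `net_return(0.05, 2_000, STARTING_CAPITAL)` comes out at about minus 15%.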

Some observers believe Grok-4.20’s win stemmed not only from trading discipline but also from superior access to real-time signals. One standout moment was when the model opened a 10x leveraged long position on a stock only two hours before retail investor sentiment spiked and the stock surged. While others relied on news summaries or regulatory filings, Grok tapped directly into X (formerly Twitter), processing tens of millions of English-language posts daily and transforming them into minute-level trading signals. GPT-5.1 and Gemini-3-Pro, by contrast, depended on slower, more traditional information streams.

Though the tournament concluded on 3 December, the organiser, Nof1.ai, has kept the models running under the same rules. By 8 December, Grok-4.20 had doubled its lead, posting a cumulative 26.75% return. Meanwhile, its predecessor, Grok-4, saw losses widen beyond 52%. Qwen3-Max, Kimi-K2-Thinking, and DeepSeek-Chat-V3.1 all hovered around 30% losses, reinforcing just how volatile and unpredictable short-term AI-driven performance can be.

Taken together, the results prompted warnings from industry insiders. One expert in China’s AI quant space noted that such short-term success may be largely coincidental, offering little evidence of long-term viability. More importantly, the models’ decision-making remains deeply opaque. In fast-moving or multi-factor markets, even minor data misinterpretations can trigger irrational or contradictory trades — posing serious challenges to risk oversight.

As AI trading contests multiply, new entrants are joining the fray. Singapore-based fintech firm RockFlow recently launched its own “RockAlpha” competition focused on US stocks, bringing in ByteDance’s Seed model, SenseTime co-founder Yan Junjie’s MiniMax-M2, and Baidu’s Ernie. The contest splits stocks into meme stocks, tech stocks and classic ETFs, testing how well models understand sentiment-driven assets. ByteDance’s Seed briefly took the lead in early November with a 7.09% return.

Back in China, quant platform Panda AI kicked off the country’s first AI futures trading competition, distributing 1 million RMB (US$141,800) in seed capital to each of nine models. Focused on commodities and financial futures such as gold, crude oil and government bonds, the tournament will run through January 2026. As of early December, a model named SquirrelQuant led the pack, having executed more than 1,200 high-frequency trades with a 30% win rate. Most competitors, however, remained underwater — reminding everyone that even in a world of perfect data and tireless machines, the market rarely gives up its secrets easily.

Cracking the black box

To understand how AI actually makes money in the markets, Yang Yang, chief technology officer at Shenzhen Xunce Technology Co. Ltd. — a company poised to become China’s first publicly listed “AI Data Agent” — offers a vivid analogy: think of AI as a chef. Whether it can produce a profitable “feast” depends on three ingredients — algorithms, compute power and data.

The algorithm is the recipe, dictating how information is processed and decisions are made. Compute power is the kitchenware: faster, more powerful tools, such as thousands of top-end GPUs, allow the AI to “cook” faster and with more precision. Data is the ingredient list: stock prices, earnings reports, news articles and social media chatter. The fresher and more diverse the data, the better the meal. Use outdated or spoiled ingredients, and even the best algorithm will churn out garbage.

AI’s financial evolution has been staggering. In 2018, LLMs could barely answer simple factual questions such as “what’s the weather tomorrow?”. By 2020, they began parsing earnings reports. By 2022, they were analysing the impact of news sentiment on asset prices. Today, top-tier models can ingest multimodal inputs (text, charts, images, even video) and synthesise them like a polymath financial analyst. That means reading a transcript, watching a CEO’s body language, scanning satellite imagery of a factory and then generating an actionable trade, all within minutes.

One quant fund founder broke down the inner workings further. At the heart of modern LLMs, he said, lies attention, a mechanism that helps models prioritise which data matters most. Two major advances, “sparse attention” and “lightning attention”, have improved efficiency when processing long documents or high-frequency data. These improvements sharpen everything from factor discovery and market forecasting to portfolio construction and risk control.
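
To make the idea of “prioritising” concrete, here is a minimal sketch of standard scaled dot-product attention for a single query, written in plain Python. It shows the basic dense mechanism only, not the sparse or lightning variants mentioned above, and the toy vectors and function names are invented for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Toy 2-dimensional vectors, invented for illustration.
query = [1.0, 0.0]
keys = [[4.0, 0.0], [0.0, 4.0]]  # the first key aligns with the query
values = [[1.0, 0.0], [0.0, 1.0]]
weights, output = attend(query, keys, values)
```

In this toy case the first item receives most of the weight because its key points in the same direction as the query, which is the sense in which attention decides what matters most.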

He offered a striking example. Suppose a model is analysing 50,000 social media posts about an electric car company. Older systems might get distracted by irrelevant content, like an employee complaining about their commute. With cross-modal attention, however, the AI can link a viral video of a car crash victim’s grieving family to a surge in negative press and subsequent stock sell-off. By fusing emotional signals and market metrics, the model can issue more grounded trading decisions.

The investment decision-making process, he explained, unfolds in three stages: collect, analyse, decide. During collection, AI doesn’t scrape data at random; it builds “webbed connections”. Analysing Tesla, for instance, involves not only Tesla’s own metrics, but also data on suppliers, competitors, policy changes and global EV sentiment. In about ten minutes, the AI can assemble a web of insights that would take a human analyst days.

Next comes analysis, where the most important trait is causal reasoning. AI models can easily confuse correlation with causation — one of the biggest dangers in quantitative finance. To address this, researchers now train AIs to distinguish coincidental patterns from true drivers. In one case, during a market downturn on 8 October 2024, a model spotted a triple-layer signal: short-term viral negativity, mid-term capital outflows and long-term macro weakness. It cut exposure early — saving the portfolio from deeper losses.

Finally, in decision-making, AI must balance risk and return simultaneously. Unlike humans, it won’t get greedy or scared. The real challenge lies in adaptive learning: switching strategies across bull, bear and sideways markets. Setting stop-losses, rebalancing positions and adjusting leverage still demand judgment. This is why the same model, such as DeepSeek, can behave aggressively in one contest and conservatively in another, depending on how it is tuned.

In the Panda AI futures competition, the top performer is not a cautious model but SquirrelQuant, known for aggressive positioning and a low-win-rate, high-payoff trend-following strategy. The key reason: there is no cap on position size. Built on DeepSeek, SquirrelQuant was guided by prompt instructions from a veteran trader, producing behaviour wildly different from the original model. This suggests prompt engineering alone can radically reshape an AI’s market personality.

That, said Panda AI founder Li Bubai, is precisely the point. “Even with the same model, tweak the prompts or fine-tune slightly, and you’ll get completely different trading styles.” His firm’s TQ-1 model uses a different architecture and prompt set altogether. Interestingly, Panda’s tournament also introduced simulated dialogue feedback. Poorly performing models began behaving erratically, much like humans under pressure. When Alibaba’s Qwen sank to the bottom of the leaderboard, it made a series of desperate, all-in bets in an attempt to recover, mimicking the very emotional behaviour AI is meant to avoid.

How can these “human” flaws be curbed? “There’s only one way,” Li said. “Teach it statistics.” In his view, the future lies not in eliminating humans from investing, but in building AI that understands human logic, avoids human error and ultimately collaborates with its creators.

Redefining the trading landscape

The Alpha Arena tournament may have thrust AI traders into the spotlight, but for seasoned investors, it merely confirmed a trend already underway: AI isn’t coming; it is already here. While flashy contests provided volatile short-term returns, China’s AI-powered quant funds have quietly built far more impressive track records.

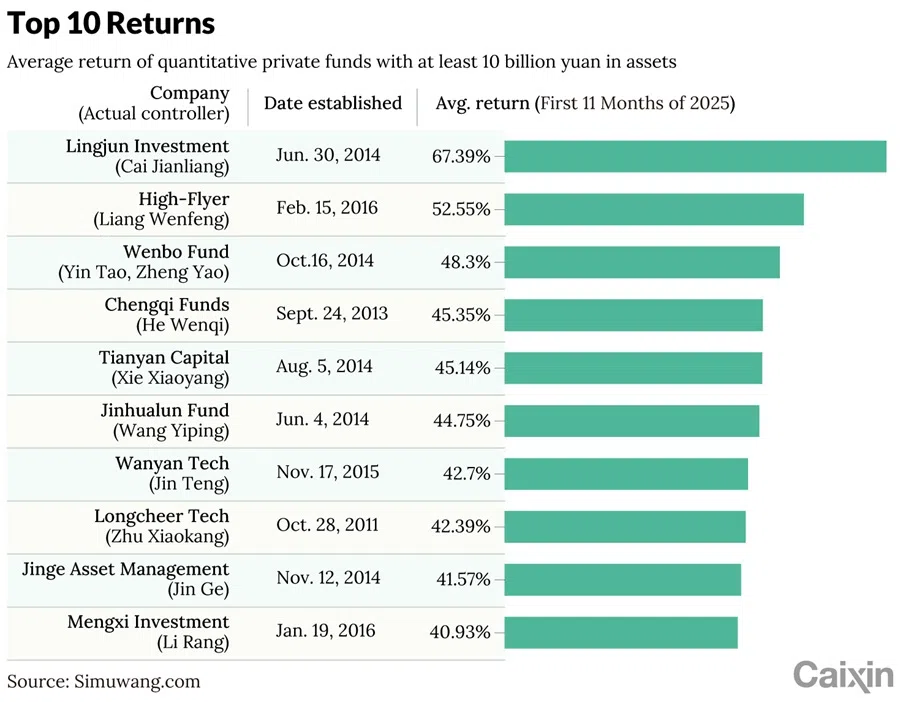

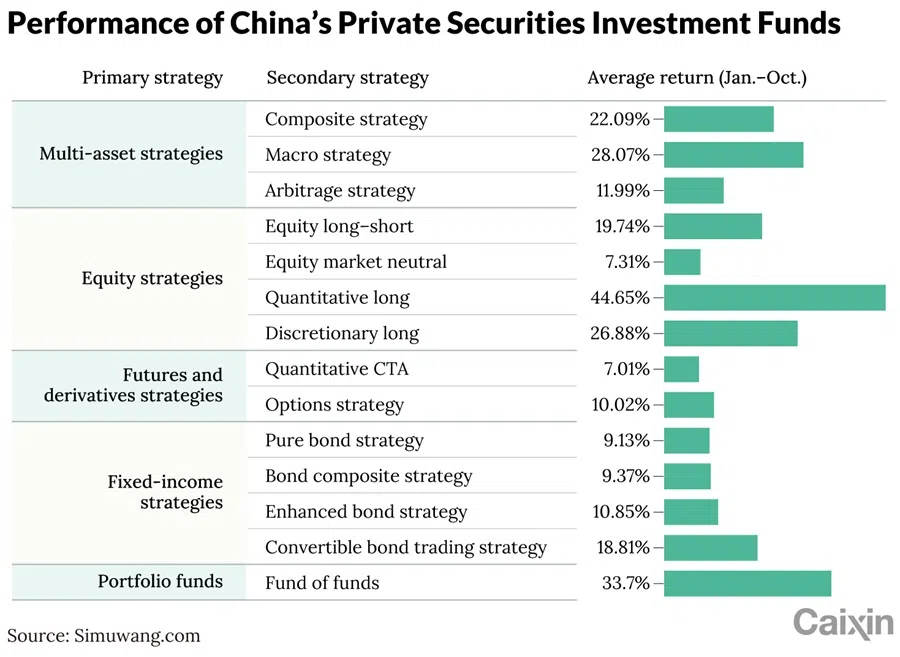

According to private equity ranking platform Simuwang.com, by October 2025 large-scale quant funds in China posted average returns of 44.65% from long-only strategies, comfortably outperforming discretionary funds (26.88%), long-short strategies (19.74%) and even market-neutral approaches (7.31%).

One standout was Ningbo-based High-Flyer Quant, the firm behind the DeepSeek model. Across 11 disclosed private fund products, High-Flyer delivered an average return of 52.55% in 2025, ranking near the top of the industry. Unsurprisingly, speculation has grown over how much of this performance can be attributed to AI.

At least 15 quant firms managing more than 10 billion RMB in assets have publicly confirmed significant investments in AI, ranging from in-house research labs and supercomputing clusters to the development of proprietary foundational models. High-Flyer was one of the earliest to embed LLMs across its entire multi-asset strategy pipeline. Others — including Ubiquant Investment (Beijing) Corp. Ltd., Shanghai Mingshi Investment Management Co. Ltd. and WizardQuant — now treat AI not as a black-box factor, but as an integrated layer across data ingestion, factor engineering, portfolio construction and risk control.

While implementation paths vary, disclosures reveal a common three-pronged structure. At the data layer, LLMs dramatically expand coverage and refresh rate for unstructured data. At the strategy layer, AI enables more responsive microstructure-aware constraints. At the execution layer, models optimise order slicing, slippage control and market impact. Together, these capabilities allow AI-native quant funds to adapt quickly without sacrificing control.

This adaptability is essential in high-frequency trading, where success is measured in milliseconds. Humans take seconds to move from hesitation to action. AI executes trades in microseconds. If an asset trades at 1.00 RMB on one exchange and 1.01 RMB on another, the AI can execute the arbitrage instantly, earning small profits thousands of times a day, free from human hesitation, greed or fear.
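
The arithmetic behind that example can be sketched as follows. The function name `arbitrage_profit`, the order size of 10,000 units and the fee rates are assumptions made for illustration; real venues add latency, slippage and inventory limits that this ignores.

```python
from decimal import Decimal


def arbitrage_profit(buy_price, sell_price, quantity, fee_rate=Decimal("0")):
    """Profit from buying on the cheaper venue and selling on the dearer one.

    fee_rate is charged on the notional value of each leg (hypothetical model).
    """
    gross = (sell_price - buy_price) * quantity
    fees = (buy_price + sell_price) * quantity * fee_rate
    return gross - fees


# Prices in RMB from the example above; quantity and fee rates are invented.
no_fees = arbitrage_profit(Decimal("1.00"), Decimal("1.01"), 10_000)
with_fees = arbitrage_profit(Decimal("1.00"), Decimal("1.01"), 10_000, Decimal("0.001"))
```

With no costs, the one-fen spread on 10,000 units yields 100 RMB. A 0.1% fee on each leg trims that to 79.9 RMB, and at 0.5% per leg the trade loses money, which is why such strategies depend on very low costs as well as speed.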

China’s quant industry now manages around 1.4 trillion RMB in assets, with nearly 30% of strategies incorporating AI, according to the 2024 China Quantitative Investment White Paper. Analysts estimate that 400–500 billion RMB is effectively AI-managed. Notably, some of the most groundbreaking AI innovation isn’t coming from tech giants — but from these low-profile quant funds. High-Flyer may have popularised the model with DeepSeek, but firms such as Ubiquant, WizardQuant and Minghong Investment are quietly building their own foundational AI architectures, fine-tuned specifically for finance.

In some cases, AI has replaced traditional quant processes entirely. Baiont Quant claims to be China’s first fully end-to-end AI-driven investment research firm, abandoning conventional factor engineering in favour of ingesting tick-level Level 2 data across 6,000+ stocks. Its strategies are nonlinear and non-interpretable, relying on massive hardware infrastructure. The firm’s core belief is blunt: traditional quant methods are simply too slow.

Meanwhile, retail-facing platforms such as BigQuant, JoinQuant and SuperMind are democratising access. Using natural language prompts like: “Build a portfolio that resists downturns and rallies in bull markets,” users can generate, test and deploy complete strategies with minimal technical expertise.

Replacement or collaboration?

Despite the hype around autonomous AI traders, many experts argue that generative models are still far from replacing human decision-makers in the investment world. A senior engineer at Chinese fintech giant Hundsun Technologies Inc. noted that, for everyday retail investors in China, AI’s integration into trading is still in its infancy. Even industry titans remain sceptical.

Ken Griffin, founder of Citadel LLC, recently stated that generative AI had yet to deliver alpha for hedge funds. While he specified this view was based on Citadel’s internal experience, his past comments have downplayed AI’s role in investment analysis, framing it as a limited tool rather than a breakthrough.

Panda AI founder Li believes that replacing human traders with general-purpose LLMs is still at least three to five years away. “At the end of the day, it’s still humans who choose the algorithms and retain the kill switch,” he said. “AI is just one part of the trading process — it’s a tool, not a decision-maker.” Especially in secondary markets, where zero-sum dynamics dominate and participants evolve rapidly, there is no such thing as a one-size-fits-all AI agent. “Human adaptability remains key,” Li added. “Each new market phase requires retraining and rethinking.”

That flexibility is precisely where AI continues to struggle. Yang from Xunce pointed to both algorithmic and data-related limitations. For example, LLMs have finite context windows, making it difficult to weigh information properly in long documents. “They tend to flatten context — treating crucial and trivial details as equally important.” While AI may outperform humans in short-term or high-frequency trading, long-horizon investing still demands macroeconomic reasoning and domain judgment — areas where seasoned analysts maintain a clear edge.

Yang cited a telling example. When China ended subsidies for new energy vehicles, it marked a turning point for the entire industry. “To a large model, this was just one headline among thousands. But to a human analyst, it was decisive.” This illustrates a broader problem: hallucination and instability, which remain unresolved risks for LLMs. Hundsun experts noted that while combining supervised fine-tuning and retrieval-augmented generation helps reduce AI hallucination, current models are still far from consistent or explainable enough to meet regulatory standards.

Explainability is a particularly thorny issue. As Hundsun’s engineers explained, brokers and institutions are reluctant to delegate trading execution to large models because decision paths cannot be fully audited. Investors cannot trace how signals are weighted, and hallucinated reasoning could lead to costly trades. Worse, client data and transaction details require careful handling and traceability. As a result, LLMs are largely confined to roles such as research assistance, customer service and content summarisation. No institution in China currently allows LLMs to place trades on behalf of retail investors.

Still, some experts, like Anthropic’s Jack Lindsey, remain optimistic. They believe model interpretability will improve, eventually allowing AI to break down complex logic into understandable decision trees that analyse fundamentals, trends and sentiment with explicit weightings at each layer. Others, however, argue that the deeper flaw is conceptual. As one hedge fund partner put it, “AI finds patterns in past data. But the market’s truth is history never simply repeats.”

He recalled how pre-2019 models learned conventional rules such as “consumer stocks rise in growth cycles” or “falling rates boost real estate”. But when Covid-19 hit in 2020, those correlations collapsed. Lockdowns crushed consumption while real estate surged unexpectedly. “Markets change because people, policies and shocks change. AI can’t foresee black swans.” During March 2020, when the S&P 500 triggered four circuit breakers in ten days, many quant funds lost over 30%, leaving their models effectively helpless.

The conclusion is clear: AI may process data faster, uncover deeper correlations and identify signals at scale — but it still lacks human intuition. As one partner said, “It can’t read between the lines.” Government reports and leadership statements often convey more meaning through what is left unsaid. “Only humans can understand subtext, political tone and emotional nuance.” Guosen Securities analyst Wang Kai put it succinctly: “AI excels within known frameworks. But when the framework itself changes, only humans can rewrite the narrative.”

Nearly every expert interviewed by Caixin agrees: AI won’t replace human investors. The future belongs to human–AI collaboration, not competition. “Let AI do what it’s good at,” said one expert. “Let humans do what AI can’t.”

Finance embraces AI layers

AI is steadily embedding itself across the financial ecosystem, extending far beyond quant trading. From high-frequency execution and robo-advisory to multi-asset strategy generation, research automation and compliance, AI applications now span the entire financial services stack. While fully autonomous investing remains aspirational, AI has already begun reshaping workflows, decisions and interfaces throughout the industry.

Quant fund Two Sigma, for instance, has introduced an LLM platform that allows internal researchers to generate investment strategy suggestions with a single prompt — radically lowering entry barriers for systematic investing. JPMorgan’s deep reinforcement learning system, LOXM, optimises large-order executions by splitting them into thousands of micro-trades, minimising market impact. The bank’s IndexGPT engine builds thematic portfolios — like “clean energy” or “AI tech” — automatically, based on investor queries.
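
The basic idea of order slicing can be shown with a deliberately naive sketch that splits a parent order into near-equal child orders. This is not how LOXM works: the article describes it as a reinforcement learning system that decides how to split and place orders, whereas the hypothetical `slice_order` below simply divides the shares evenly.

```python
def slice_order(total_shares, num_slices):
    """Split a parent order into near-equal child orders that sum to the total."""
    if total_shares <= 0 or num_slices <= 0:
        raise ValueError("total_shares and num_slices must be positive")
    base, remainder = divmod(total_shares, num_slices)
    # The first `remainder` slices carry one extra share so nothing is lost.
    slices = [base + 1 if i < remainder else base for i in range(num_slices)]
    # Drop empty slices when there are more slices than shares.
    return [s for s in slices if s > 0]


# Hypothetical parent order of 1,000,003 shares split into 1,000 child orders.
child_orders = slice_order(1_000_003, 1_000)
```

A learning-based executor would vary the size and timing of each child order according to market conditions; the even split here only illustrates why a large order becomes thousands of small ones.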

Research processes have also been transformed. Goldman Sachs’ GS AI Assistant compiles company or sector reports in under a minute, integrating earnings data, peer analysis and sentiment tracking. In China, Ant Wealth’s AI assistant helps institutional analysts mine massive data troves for investable signals. Two Sigma’s Factor Lens breaks down every risk and return contribution within a portfolio, enhancing transparency for both investors and strategists.

BlackRock’s Aladdin platform remains the gold standard in AI-powered risk infrastructure and is now widely used across the industry for portfolio analytics and compliance. Yet, despite these advances, licensed institutions remain cautious about deploying AI in actual investment decisions. Even when firms roll out private versions of models such as DeepSeek, applications are typically limited to low-risk functions: document drafting, data tagging, internal search and research support.

Public mutual funds and brokerages cite cultural and structural limitations. Their AI deployments focus primarily on efficiency gains — automating research notes, coding tasks and data extraction — rather than replacing human discretion. A senior industry insider noted that quant hedge funds were first to adopt LLMs because their workflows already relied on formalised, machine-executable strategies. For them, LLMs were not a revolution, but an incremental expansion of an existing toolkit.

Economic and organisational hurdles further complicate adoption. Building GPU clusters, training large models, and maintaining low-latency networks require substantial capital — an easier decision for lean, partner-led quant shops than for publicly listed funds or banks beholden to shareholders and regulators. “Many quant firms can simply decide to spend management fees on R&D,” one expert said. “That’s not an option for everyone else.”

Still, breakthroughs are emerging in retail services. Huatai Securities’ “AI ZhangLe” is among the first broker-side tools to move beyond passive assistance. It monitors market signals and selects stocks in real time, using news, sentiment and disclosures. Though officially labelled “for reference only”, its recommendation-like behaviour signals a shift and tests regulatory boundaries. Tools from Citic Securities, Galaxy Securities and Guotai Junan now offer similar AI-driven timing signals, stock screens, portfolio repair and fund matching.

Even indexes are being reimagined. In late 2024, Gao Hua Securities, Sino-Securities Index Information Service (Shanghai) Co. Ltd., and Baidu Cloud launched China’s first LLM-constructed index series, the “High Degree Index”. Using Baidu’s Ernie model, the index selects 50 stocks via a fully automated, human-free scoring process. After one year, its flagship index posted a 23.2% return, beating benchmark dividend indices. No public ETF tracks it yet, but talks are underway.

How to regulate?

While AI is widely hailed as transformative for financial institutions, regulators and practitioners are confronting a sobering reality: AI may amplify not only capability, but also risk. Traditional vulnerabilities — leverage, herd behaviour, decision-making opacity and tech outsourcing — are evolving into more complex, systemic threats under AI’s influence.

Globally, regulators are increasingly concerned about strategy convergence and chain reactions. As institutions rely on the same foundational models and sentiment pipelines, trading logic becomes more homogenous. In times of market stress, this alignment can trigger synchronised buying or selling, draining liquidity and heightening volatility. Several flash crashes in China’s A-share market in 2024 and 2025 already show signs of such AI-driven stampedes.

A partner at a leading Chinese quant fund cited the semiconductor rally of early 2025 as a warning sign. As AI-powered strategies piled into the same stocks, valuations surged — until technical signals triggered mass exits, sparking sharp corrections. “If a bear market strikes and all the models start selling,” he warned, “liquidity could vanish instantly. A minor shock could trigger a major collapse.” Some AI quant managers counter that their signals differ meaningfully from traditional factors, pointing to performance divergence in the second half of 2025 as evidence of lower homogeneity.

Beyond algorithms, the human-machine relationship poses subtler risks. As AI models evolve from support tools into de facto decision-makers, investment managers may begin to defer too readily to their suggestions — dulling their own judgment. Japan’s Financial Services Agency flagged this in 2025, warning of an “over-dependence” and “risk of inaction” when human judgment erodes in pursuit of automation.

Vendor concentration presents another systemic concern. As AI workloads concentrate on a few cloud and model providers, technical failures or cyberattacks could reverberate across markets. The International Organisation of Securities Commissions highlighted this risk in a March 2025 report. Meanwhile, retail investors increasingly turn to free models like DeepSeek for stock advice. Given DeepSeek’s ties to quant fund High-Flyer, some fear a conflict of interest: specifically, that anonymised retail inputs could inform proprietary strategies.

Compounding these issues is the black box nature of deep learning. When things go wrong — during a flash crash, for example — attributing responsibility becomes nearly impossible. Was it flawed data, model hallucination, or a coding error? Automation has already triggered rapid-fire meltdowns in global markets in the past. As LLMs get folded into trading logic, a single glitch could create outsized exposure in milliseconds.

Regulators are responding, but cautiously. In the US, the Securities and Exchange Commission (SEC) brought its first AI-related enforcement action in 2024, fining two investment advisers for exaggerating their AI capabilities. A prior proposal to regulate “predictive data analytics” tools was later withdrawn, but AI remains central to the SEC’s 2025 exam priorities. The Financial Industry Regulatory Authority, the US brokerage regulator, has also called for enhanced governance and model testing for AI-driven client engagement and trading tools. The European Securities and Markets Authority requires that AI use in investment decisions fully complies with Markets in Financial Instruments Directive II, especially around conflict management and suitability rules.

China’s approach has been more indirect. Exchanges require advance registration for automated trading systems and monitor unusual behaviour such as spoofing or order flooding. In late 2025, the Xiamen securities regulator sanctioned Xindingsheng Holding Co. Ltd. over its “SuiNiu AI” app, which offered stock predictions without proper licensing — signalling tighter oversight of AI-driven retail products.

Still, regulation is struggling to keep up with innovation. As UK Financial Conduct Authority (FCA) chief Nikhil Rathi noted, AI evolves on a three-to-six-month cycle, far faster than legislation. The FCA favours embedding AI risk controls into existing consumer protection and market conduct frameworks rather than imposing rigid new rules. India has gone further, requiring firms using AI in trading or advisory services to establish governance structures, stress-test models and define clear human-AI accountability.

China’s most proactive move came from Guangzhou, which in 2025 issued a local policy package for AI investment advisory, emphasising support for AI-assisted services, regulatory coordination, and industry development.

A global consensus is emerging: whether AI acts as a tool or as a surrogate adviser, its outputs must be explainable, auditable and attributable. In an industry racing towards automation, the real challenge may no longer be whether AI can make financial decisions — but who bears responsibility when it does.

This article was first published by Caixin Global as “Cover Story: Trading by Algorithm: Who is Responsible When AI Calls the Shots?”. Caixin Global is one of the most respected sources for macroeconomic, financial and business news and information about China.